The global AI server market, and the server CPUs at its core, are undergoing a transformative phase, driven by explosive demand for generative AI and cloud-scale infrastructure. According to IDC’s latest report, AI server spending is projected to grow from $125.1 billion in 2024 to $158.7 billion in 2025, with a compound annual growth rate (CAGR) of 18.8% through 2028. This surge is fueled by enterprises and hyperscalers investing in AI training and inference workloads, such as large language models (LLMs) and autonomous systems.
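The growth figures above can be sanity-checked with a few lines of arithmetic. This is a minimal illustrative sketch; the helper names are hypothetical, and it assumes the 18.8% CAGR is measured over the four years from 2024 to 2028, because the report's base year is not stated here.

```python
def growth_rate(start: float, end: float, years: int = 1) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def compound_factor(rate: float, years: int) -> float:
    """How many times a value grows after `years` at a constant annual `rate`."""
    return (1 + rate) ** years


# Figures quoted from the IDC report, in billions of USD.
market_2024 = 125.1
market_2025 = 158.7

# Single-year growth from 2024 to 2025.
yoy = growth_rate(market_2024, market_2025)

# Assumption: the 18.8% CAGR spans 2024-2028 (four compounding years).
four_year = compound_factor(0.188, 4)

print(f"2024->2025 growth: {yoy:.1%}")               # 26.9%
print(f"18.8% CAGR over 4 years: {four_year:.2f}x")  # 1.99x
```

Under that assumption the market would roughly double by 2028, and the single-year jump of about 26.9% implies slower growth in the later years.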

Industry Giants Compete for Market Leadership

Intel and AMD are locked in a fierce rivalry to capture this growing market. Intel reports that its 3rd Gen Xeon Scalable processors, with built-in AI acceleration via Intel DL Boost, deliver up to 25x better inference performance than the AMD EPYC 7763 in object detection tasks; AMX (Advanced Matrix Extensions) arrived later, with the 4th Gen Xeon Scalable family. The recently launched Xeon 6 Performance-core processors offer up to 128 cores and 504MB of L3 cache.
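Whether a given server actually exposes these AI instruction sets can be checked from the operating system. The following is a minimal, Linux-only sketch. It assumes the kernel's `/proc/cpuinfo` flag names: `avx512_vnni` for the VNNI instructions behind DL Boost, and `amx_tile`, `amx_int8`, and `amx_bf16` for AMX. The function names are hypothetical.

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names for the AI instruction sets discussed above.
AI_FEATURES = {
    "Intel DL Boost (AVX-512 VNNI)": {"avx512_vnni"},
    "AMX (tiles + INT8 + BF16)": {"amx_tile", "amx_int8", "amx_bf16"},
}


def parse_flags(cpuinfo_text: str) -> set[str]:
    """Return the flag set from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            return set(value.split())
    return set()


def detect_ai_features(cpuinfo_text: str) -> dict[str, bool]:
    """Map each feature name to whether all of its required flags are present."""
    flags = parse_flags(cpuinfo_text)
    return {name: required <= flags for name, required in AI_FEATURES.items()}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # Linux only
        for name, ok in detect_ai_features(cpuinfo.read_text()).items():
            print(f"{name}: {'yes' if ok else 'no'}")
    else:
        print("/proc/cpuinfo not found; this sketch assumes Linux.")
```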

Meanwhile, AMD is leveraging its EPYC 9005 series, including the 192-core EPYC 9965, to challenge Intel. These processors support DDR5 memory at 6400 MT/s and up to 384MB of L3 cache, and target AI workloads such as natural language processing (NLP) as well as high-performance computing (HPC). AMD claims its 5th Gen EPYC “Turin” processors deliver 5.4x faster performance than Intel’s Xeon Platinum 8592+ in Llama 2-based chatbot tasks, though Intel disputes these benchmarks.
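A memory speed such as 6400 MT/s converts to bandwidth with a simple formula: transfers per second × 8 bytes per 64-bit channel × number of channels. The sketch below assumes 12 memory channels per socket and reports theoretical peaks only, so sustained bandwidth in practice is lower. The same formula applies to the 8800 MT/s MRDIMM speed Intel cites for Xeon 6. The helper name is hypothetical.

```python
# 64-bit data path per DDR5 channel (ECC bits excluded).
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(mt_per_s: int, channels: int) -> float:
    """Theoretical peak memory bandwidth for one socket, in decimal GB/s."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


# Assumption: 12 memory channels per socket.
ddr5_6400 = peak_bandwidth_gbs(6400, channels=12)
mrdimm_8800 = peak_bandwidth_gbs(8800, channels=12)

print(f"DDR5-6400 x 12 channels:   {ddr5_6400:.1f} GB/s")    # 614.4
print(f"MRDIMM-8800 x 12 channels: {mrdimm_8800:.1f} GB/s")  # 844.8
```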

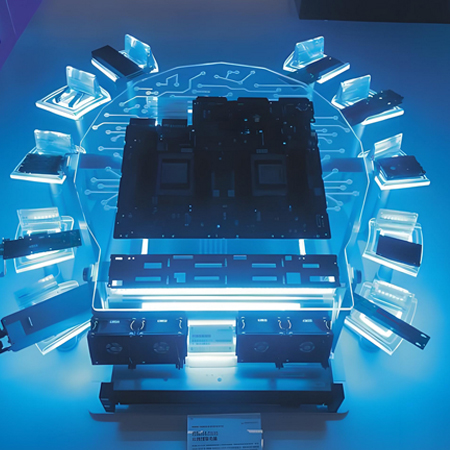

NVIDIA, while primarily a GPU leader, is expanding its CPU footprint through partnerships and its own silicon. Its DGX-2 servers pair 16 V100 GPUs with Intel Xeon CPUs to deliver 2 petaFLOPS of AI training performance. In addition, NVIDIA’s Arm-based Grace CPU is gaining traction in cloud AI infrastructure, offering energy efficiency and cost savings.
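The 2 petaFLOPS figure is an aggregate across all of the system's GPUs. This back-of-the-envelope sketch divides it evenly across the 16 V100s. It assumes the quoted number is simply the sum of per-GPU mixed-precision Tensor Core peaks.

```python
SYSTEM_PFLOPS = 2.0  # DGX-2 AI training figure quoted above
NUM_GPUS = 16        # V100 GPUs per DGX-2

# Convert petaFLOPS to teraFLOPS and split evenly across GPUs.
per_gpu_tflops = SYSTEM_PFLOPS * 1000 / NUM_GPUS
print(f"{per_gpu_tflops:.0f} TFLOPS per GPU")  # 125 TFLOPS per GPU
```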

Market Dynamics and Regional Growth

The AI server market is being reshaped by generative AI applications. IDC reports that generative AI servers will account for 29.6% of the market in 2025, rising to 37.7% by 2028. Hyperscalers such as AWS, Microsoft Azure, and Alibaba Cloud are investing heavily in custom chips, such as Alibaba’s Yitian 710, a 128-core Arm-based CPU optimized for cloud AI workloads.
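Combining the share figure with IDC's 2025 market estimate quoted at the start of this article gives a rough dollar amount. This minimal sketch assumes the 29.6% share applies to the same $158.7 billion base, which the article does not state explicitly.

```python
market_2025_busd = 158.7  # IDC 2025 AI server market estimate, billions of USD
genai_share_2025 = 0.296  # generative AI servers' share of that market in 2025

genai_spend_2025 = market_2025_busd * genai_share_2025
print(f"Implied generative AI server spend, 2025: ${genai_spend_2025:.1f}B")  # $47.0B
```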

Regionally, North America leads, with AI server spending growing 104.1% (more than doubling) in 2024, while China’s AI computing capacity surged 74.1% year over year. Japan plans to invest $468 million in an AI supercomputing center with 10,000 NVIDIA GPUs, underscoring Asia’s commitment to AI infrastructure.

Technological Innovations and Challenges

Key innovations include:

Energy Efficiency: AMD reports that its EPYC processors achieve 30% power savings in AI workloads compared with previous generations, while Intel’s Xeon 6 series supports 8800 MT/s MRDIMM memory for higher data throughput.

Security: Intel’s Software Guard Extensions (SGX) and AMD’s Infinity Guard provide hardware-based isolation and memory encryption that help protect AI workloads and their data from attack.

Scalability: The adoption of CXL 2.0 and PCIe 5.0, both supported by Xeon 6 and EPYC 9005, enables higher-bandwidth integration of GPUs, accelerators, and memory expansion.
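PCIe 5.0 signals at 32 GT/s per lane with 128b/130b encoding, and CXL 2.0 runs over the same physical layer. A quick calculation therefore shows the raw link bandwidth available to an attached GPU or accelerator. The sketch below uses a hypothetical helper name and ignores packet and protocol overhead, so real throughput is somewhat lower.

```python
def pcie_bandwidth_gbs(gt_per_s: float, lanes: int,
                       encoding: tuple[int, int] = (128, 130)) -> float:
    """Raw per-direction link bandwidth in GB/s after line encoding."""
    payload_bits, total_bits = encoding
    # Each transfer carries one bit per lane; encoding removes 2 of every 130 bits.
    bits_per_s = gt_per_s * 1e9 * payload_bits / total_bits * lanes
    return bits_per_s / 8 / 1e9


gen5_x16 = pcie_bandwidth_gbs(32, 16)
print(f"PCIe 5.0 x16: {gen5_x16:.1f} GB/s per direction")  # 63.0
```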

However, challenges persist, including supply chain constraints and the need for advanced cooling solutions. Software architecture can also ease the pressure on hardware: Dataxet, a media analytics firm, reportedly cut its CPU utilization by 70% by moving to Huawei Cloud’s serverless architecture and AI-optimized CPUs.

The Road Ahead

As AI becomes ubiquitous in healthcare, finance, and manufacturing, the demand for specialized CPUs will intensify. Intel and AMD are racing to optimize their products for emerging workloads, while NVIDIA and Chinese players like Huawei (with its Ascend 910B AI chip) are disrupting the status quo.

Gartner predicts AI servers will account for 70% of the global server market by 2028, highlighting the critical role of CPUs in powering the next wave of AI innovation. With generative AI driving unprecedented compute needs, the battle for AI server CPU supremacy is set to redefine the semiconductor landscape.